Right now it feels like, almost daily, we're seeing incredibly powerful and exciting new developments in AI and machine learning. Large Language Models like GPT-4 are giving people with no expertise in building AI tools brand-new opportunities to do things we thought were impossible (or at least very, very hard).

Even so, you can still run into problems where GPT doesn't do exactly what you need. Maybe it doesn't have the information you need it to work with (because it's niche, or because it's private data about your company). Or maybe you find yourself spending ages writing instructions to convince it to pay attention to certain things, answer in specific ways, or extract information the way you want.

OpenAI themselves have given talks on how to solve these problems and supercharge GPT's performance.

(Pictured above) recreation of OpenAI's techniques for maximising LLM performance.

OpenAI recommends that everyone starts in the bottom left-hand corner with "prompt engineering" before moving up, or to the right, based on specific needs. While options like "prompt engineering" have been well covered by others in the past, until now, options like RAG and fine-tuning have stayed locked behind specific technical knowledge. That means that the average person can't use these powerful options to get even more out of these world-class tools.

Not anymore! In this post, we're going to briefly explain "RAG" and "fine-tuning" and share some free tools that will let you supercharge how you use GPT, without needing any coding knowledge at all.

Getting extra data into the model with RAG (Retrieval-Augmented Generation)

A common problem when working with tools like GPT is that, while they are great at surfacing information, they might not always know the information we need them to work with.

Often the best solution to that is just "put that information in the prompt". We have some great examples of doing just that in this blog post which talks about how different members of our team use some of the latest ML tools.

The big problem is that sometimes we don't know exactly which information is the most relevant. Say we want GPT to answer a series of questions using internal data from our company. We've got a few options. We could:

- Copy and paste in all of the information that could be relevant (but then the model tends to miss the actual relevant information about 40% of the time).

- Pull out the most relevant information for each question (but that takes a bunch of time, and we were really hoping systems like GPT could do that sort of work for us!).

- Use the same kind of tech that powers GPT, to dynamically pull in the most relevant information each time we send a question.

(Spoiler alert - what we're offering does the third option for you).

How do we make our information accessible to GPT?

One of the things that makes GPT so powerful is you don't have to use exact words to find the information you need. You can describe things how it makes most sense to you, or misspell things, and the system will still understand what you're talking about.

That's because, when you send a message to a tool like GPT, it doesn't actually see the words you've written. Instead, your words are converted into a big long string of numbers that represents the concept of what you wrote down.

This is the concept of "mouse" and the concept of "elephant" converted into numbers the way GPT understands them.

What's really useful about that is once words are converted into numbers you can plot them on a kind of chart. You can say, "Here are all the mouse-like words, elephant-like words are a bit further away, and right now I don't want any of that stuff - I want the sentences that are about last year's revenue".
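To make the "plotting words on a chart" idea a bit more concrete, here is a minimal sketch in Python. The numbers are made up by us for illustration (real models use hundreds or thousands of numbers per piece of text, and these are not what GPT actually produces), and `rank_by_similarity` is a hypothetical helper name. It uses cosine similarity, one common way to measure how close two sets of numbers are.

```python
import math


def cosine_similarity(a, b):
    """How closely two lists of numbers point in the same direction."""
    dot = sum(x * y for x, y in zip(a, b))
    norm_a = math.sqrt(sum(x * x for x in a))
    norm_b = math.sqrt(sum(y * y for y in b))
    return dot / (norm_a * norm_b)


# Made-up, three-number stand-ins for real embeddings (an assumption for
# illustration only).
vectors = {
    "mouse": [0.9, 0.1, 0.0],
    "rat": [0.8, 0.2, 0.1],
    "elephant": [0.3, 0.9, 0.0],
    "last year's revenue": [0.0, 0.1, 0.9],
}


def rank_by_similarity(query, vectors):
    """Sort every other entry from most similar to least similar."""
    query_vector = vectors[query]
    scores = [
        (name, cosine_similarity(query_vector, vector))
        for name, vector in vectors.items()
        if name != query
    ]
    return sorted(scores, key=lambda pair: pair[1], reverse=True)


ranking = rank_by_similarity("mouse", vectors)
for name, score in ranking:
    print(f"{name}: {score:.2f}")
```

With these toy numbers, "rat" lands closest to "mouse", "elephant" is a bit further away, and the revenue sentence is nowhere near either of them.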

So, basically, RAG involves:

- Converting all your important, potentially relevant information into these numbers and saving it in a special database.

- Converting each question you want to ask GPT into numbers too, and checking your database for saved data with similar numbers.

- Dynamically adding that data to the prompt when you ask GPT to answer your question.
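If you're curious what those three steps might look like in code, here is a rough sketch. It is not how our extension works under the hood. To keep it runnable without any accounts or API keys, `toy_embed` is a hypothetical stand-in that just counts words, so unlike a real embedding model it only matches exact words. The documents and the function names are also made up for illustration.

```python
import math
import re
from collections import Counter


def toy_embed(text):
    """Hypothetical stand-in for a real embedding model: plain word counts."""
    return Counter(re.findall(r"[a-z0-9]+", text.lower()))


def cosine_similarity(a, b):
    """Similarity between two word-count vectors (0.0 if nothing overlaps)."""
    dot = sum(count * b[word] for word, count in a.items())
    norm_a = math.sqrt(sum(c * c for c in a.values()))
    norm_b = math.sqrt(sum(c * c for c in b.values()))
    if norm_a == 0 or norm_b == 0:
        return 0.0
    return dot / (norm_a * norm_b)


def build_store(documents):
    """Step 1: convert each document into numbers and save both together."""
    return [(doc, toy_embed(doc)) for doc in documents]


def retrieve(question, store, top_k=1):
    """Step 2: convert the question too, then find the most similar documents."""
    question_vector = toy_embed(question)
    ranked = sorted(
        store,
        key=lambda item: cosine_similarity(question_vector, item[1]),
        reverse=True,
    )
    return [doc for doc, _ in ranked[:top_k]]


def build_prompt(question, store, top_k=1):
    """Step 3: add the retrieved documents to the prompt."""
    context = "\n".join(retrieve(question, store, top_k))
    return f"Use this information to answer:\n{context}\n\nQuestion: {question}"


# Made-up example data.
store = build_store([
    "Last year's revenue was 1.2 million, up from the year before.",
    "The office is closed on public holidays.",
    "Our support team answers tickets within one working day.",
])

prompt = build_prompt("What was revenue last year?", store)
print(prompt)
```

The prompt that comes out contains only the revenue sentence, which is the whole point: the model gets the relevant information without us having to paste in everything or pick it out by hand.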

Until now, that has meant writing code to do advanced data pipelining and to work with specialised APIs and databases. Now you can create your own disposable version of these RAG databases just by opening up a Google Sheet, pasting your important data into a table, and clicking the buttons on our free, custom extension.

If you want to learn more, here are some videos of me explaining how the tool is useful and how to use it:

Teaching the model to behave differently with fine-tuning

While RAG helps add extra information to the model, fine-tuning helps change the way the model behaves by giving it new examples to learn from.

The idea here is more straightforward than RAG. When we're working with a tool like GPT we're basically trying to give it the right instructions so that it gives us certain outputs. The default way to do that is to write those instructions straight into the prompt - this is a big part of "prompt engineering".

Sometimes those instructions end up having to be quite long and complex, and sometimes just writing them into the prompt isn't enough. Something you've probably noticed in the past (I certainly have) is that when you're trying to get someone to do something specific, the best way to get on the same page is to give them a bunch of examples of exactly what you want.

That's the idea behind fine-tuning: instead of writing out loads of instructions, you give the model a bunch of examples. That creates a custom version of a GPT model that is trained on your examples, on top of all the training and knowledge it had to begin with.
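For a sense of what "a bunch of examples" looks like as data, here is a small sketch. As far as we're aware, OpenAI's fine-tuning for chat models takes a file with one JSON object per line, each holding a list of `messages` with `system`, `user` and `assistant` roles, but treat that as an assumption and check OpenAI's current documentation before relying on it. The example questions and answers and the `to_training_line` helper are made up for illustration.

```python
import json


def to_training_line(question, answer, system_message=None):
    """Turn one question/answer pair into one line of a training file."""
    messages = []
    if system_message:
        messages.append({"role": "system", "content": system_message})
    messages.append({"role": "user", "content": question})
    messages.append({"role": "assistant", "content": answer})
    return json.dumps({"messages": messages})


# Made-up examples of the behaviour we want the model to learn.
examples = [
    ("Summarise: Sales rose 10% in March.", "Sales: +10% (March)"),
    ("Summarise: Traffic fell 5% in April.", "Traffic: -5% (April)"),
]

# One JSON object per line (often called JSONL).
training_file_text = "\n".join(
    to_training_line(question, answer, system_message="Answer in short note form.")
    for question, answer in examples
)
print(training_file_text)
```

Each line pairs a question with exactly the answer you'd want back, so the model learns the pattern from the examples rather than from a long set of written instructions.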

OpenAI has found, through work with its own clients, that fine-tuned versions (even ones based on older models) often outperform the most cutting-edge models, just because they are better suited to the task.

Normally you would have to write code to construct your examples, use APIs to start fine-tuning, and use a specific API to work with your fine-tuned models - but not any more!

We've created another free sheet for you, where you just paste a bunch of example questions and answers into a tab.

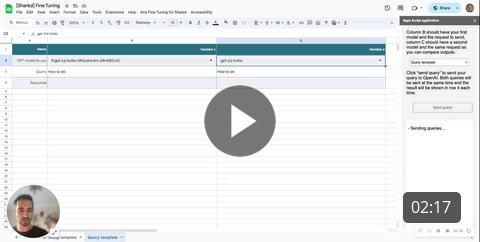

Then you press some buttons in the extension we made (pictured right) to upload your data to your own private OpenAI account.

The sheet will automatically start a fine-tuning job, again in your own OpenAI account. It'll start creating a custom-tuned version of GPT that has learned from the extra examples you've shared.

When the fine-tuning is done, you can use the same extension to send prompts to your fine-tuned model and even compare the responses you get from it to responses you've got from other models.

If you want to learn more, here are some videos of me explaining how the tool is useful and how to use it:

Conclusion

So there you have it - two free tools that will help you take your use of GPT to the next level.

At Aira, we believe that the more people have access to more tools, the more we'll see amazing and creative ideas for what to do next. We can't wait to see what you do with these!